Sandboxes that automatically analyze files are useful aids for malware analysis. However, they should be used with caution because their reports often lead to false conclusions, as G DATA malware researcher Karsten Hahn knows. “Many of the criteria are not meaningful for laypersons and are sometimes even misleading,” he says.

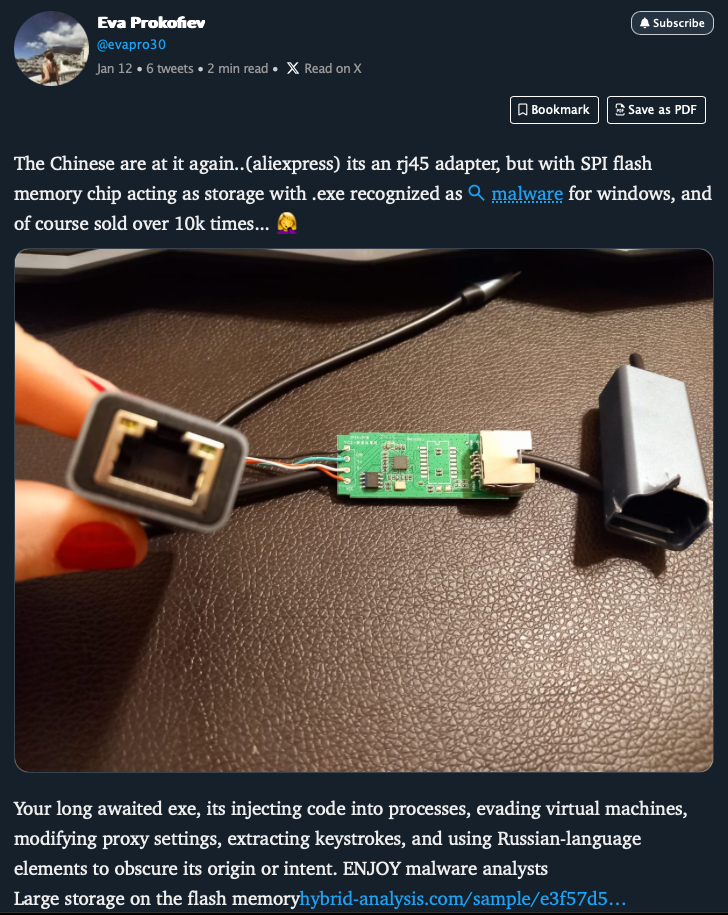

This is exactly what happened in a recent case. According to a tweet, an inexpensive network adapter with a USB-C port contained malware. Attached: a link to a sandbox analysis.

Foregone conclusion

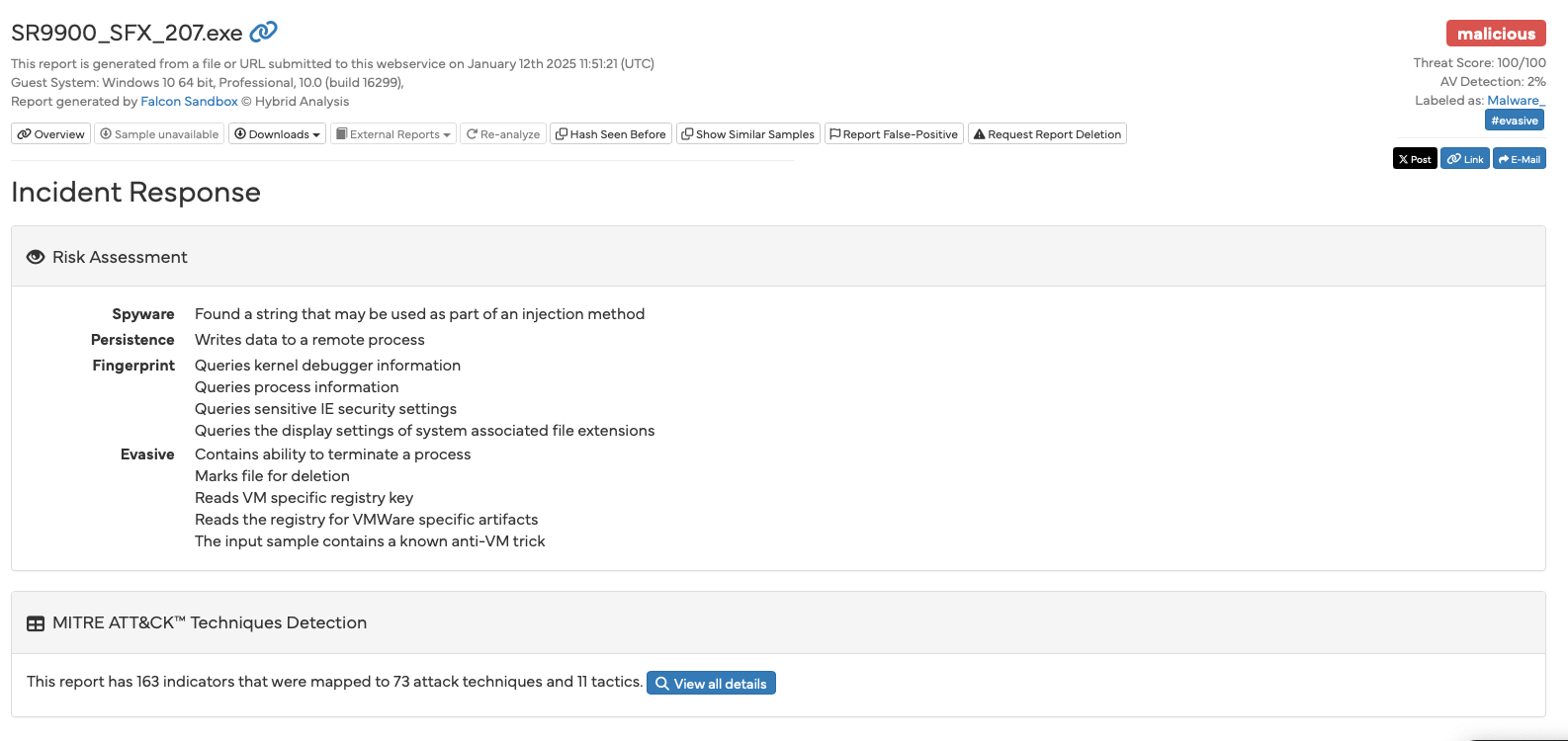

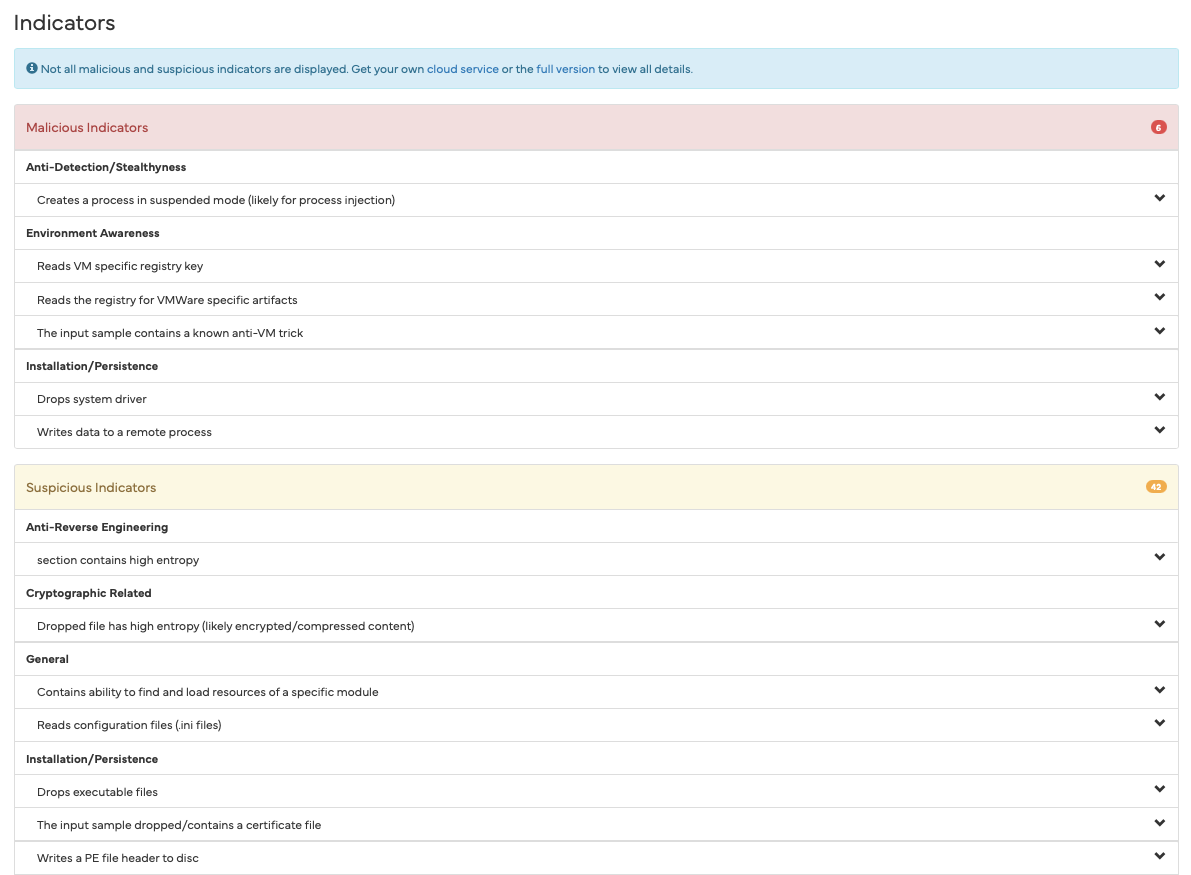

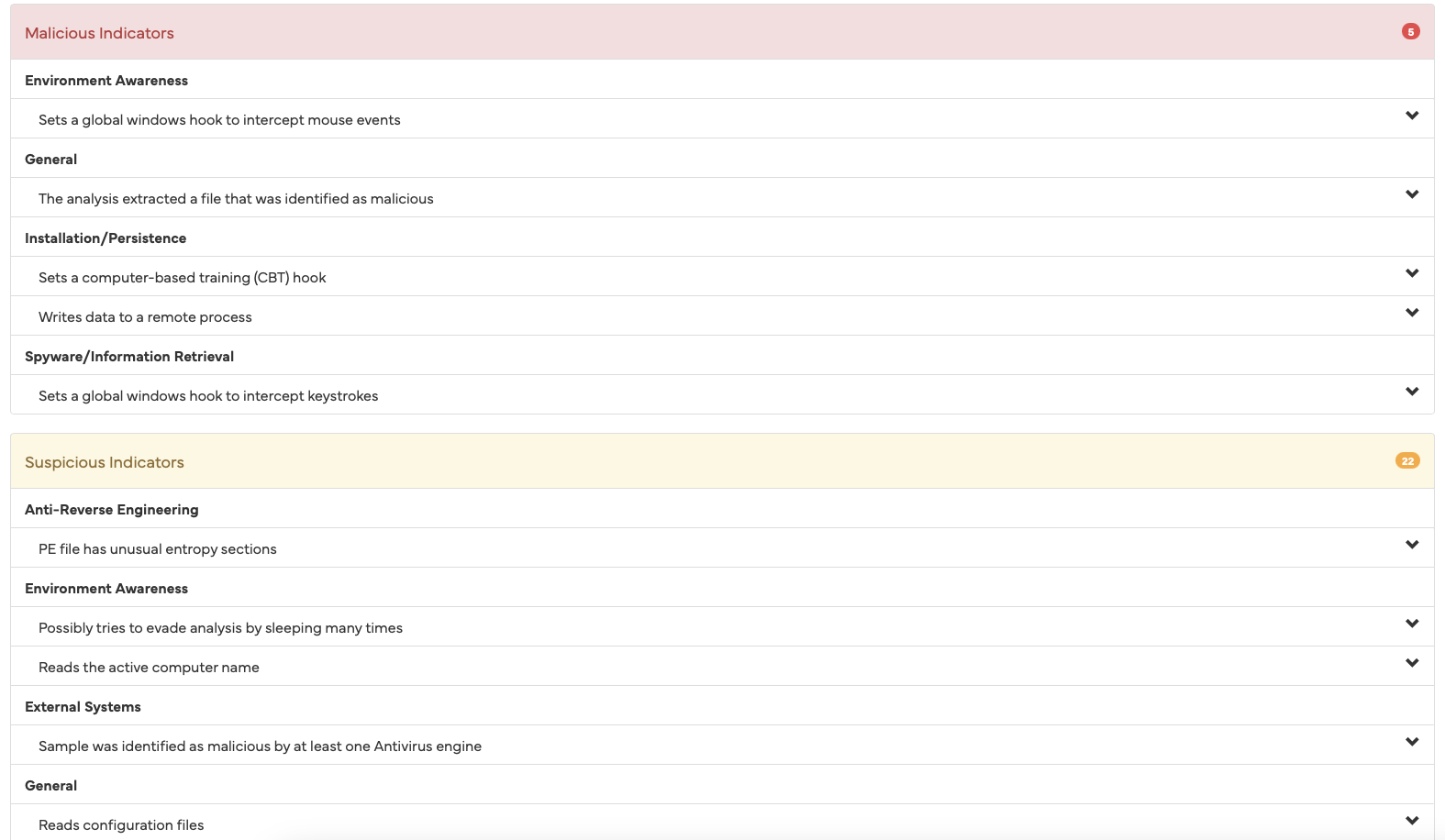

Anyone who sees only these results may well conclude that something is not right here and that the examined file is definitely harmful. The sandbox output is a frightening read. Various criteria are listed one after the other, sorted into categories such as “Suspicious” and “Malicious”.

Among other things, it is rated as “malicious” that the EXE file stored on the device contains a system driver and creates a process that is started in “sleep mode” (“suspended”). The sandbox also found indications that the application specifically checks whether it is running on a virtual machine (VM) and is trying to hide from it.

The fact that the application file can delete files and update the user profile seems at least suspicious to the sandbox. It also detects attempts to hinder reverse engineering, because the application checks for debuggers. Other criteria follow, including the ability to perform file system actions such as creating and deleting folders, access to the registry and much more.

The author concludes that this is clearly malware. Anyone without the required specialist knowledge would - understandably so - come to the same conclusion.

The only problem here is:

This conclusion is completely wrong. This is because criteria have been misinterpreted and not all of the criteria mentioned are suitable for making a statement about whether a file is harmless or malicious.

Context is king

Data from a sandbox must always be embedded within an overall context. Without this context, it is easy to investigate in the wrong direction. This is why an automatic sandbox report is not a suitable replacement for a malware scanner. To illustrate why this is the case, here is a counter-example. Same sandbox, same procedure.

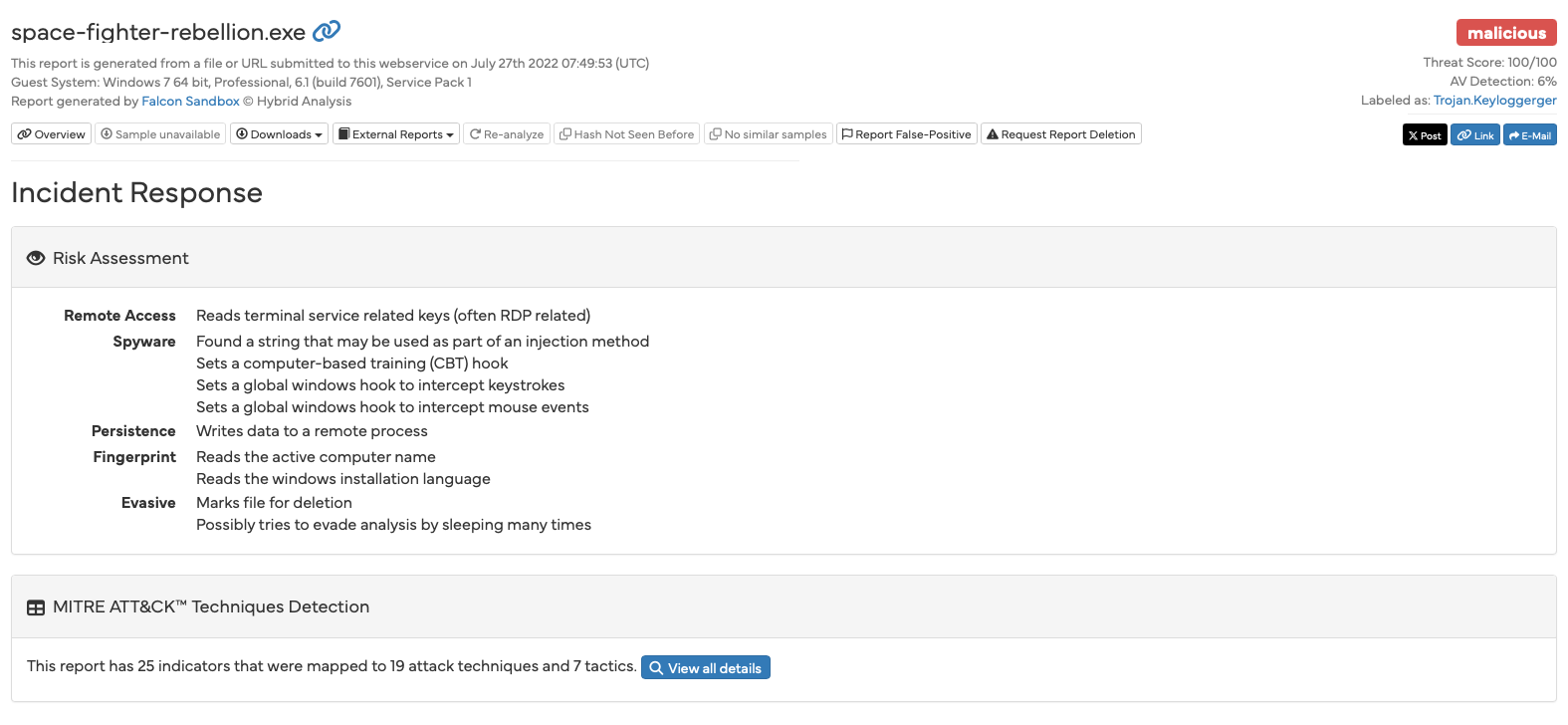

The uploaded file is a game called “Space Fighter Rebellion”. The version that was placed in the sandbox does not contain any malware and is definitely “clean”.

What we have here, however, paints a different picture:

Without a deeper understanding and without further context, we could easily arrive at the conclusion that this is spyware that wants to spy on what we do on the computer. The fact that the program reads our mouse movements as well as our keystrokes would be typical of spyware with a keylogger function.

But if we remember that the file is a game, it may also become clear why keystrokes and mouse movements could be of interest to a program: Without them, you couldn't play the game at all. So we can see that not everything that looks suspicious at first glance actually is. Behind some sinister-sounding descriptions, there is potentially a completely normal and legitimate activity.

What malware is (and isn't)

Malware is also “just software”: it performs a specific task on a system and uses the functions provided by the operating system to do so. Legitimate programs use the very same functions, which is why it is important to carefully select the criteria that make a file appear malicious.

To give a more tangible example: Feeding a hypothetical alarm system for a house with criteria designed to indicate a burglar, such as “wears shoes”, “has a bag with them”, “has a woolly hat on” or “moves around the home after dark”, would not be effective. These criteria are too general and apply to so many people with a legitimate reason to be there that this hypothetical system would be completely worthless. Of course, each of these criteria also applies to burglars - but also to very many people who are not burglars. For example, under the above conditions, the homeowner who comes home from work at 7pm on a bitterly cold winter evening and puts his bag down would be recognized as a burglar.
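The alarm system from this analogy can be written down as a minimal Python sketch. The `Visitor` class and the `naive_alarm` function are hypothetical names made up for illustration; only the four criteria come from the text above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Visitor:
    """A person observed by the hypothetical alarm system."""
    name: str
    wears_shoes: bool
    carries_bag: bool
    wears_woolly_hat: bool
    moves_after_dark: bool


def naive_alarm(visitor: Visitor) -> bool:
    """Raise the alarm when all four generic criteria match."""
    return all((
        visitor.wears_shoes,
        visitor.carries_bag,
        visitor.wears_woolly_hat,
        visitor.moves_after_dark,
    ))


# The homeowner returning at 7pm on a cold winter evening
# matches exactly the same criteria as an actual burglar.
homeowner = Visitor("homeowner", True, True, True, True)
burglar = Visitor("burglar", True, True, True, True)

flagged = [v.name for v in (homeowner, burglar) if naive_alarm(v)]
print(flagged)
```

Both visitors end up in `flagged`. The criteria are not wrong about the burglar; they simply cannot tell the burglar apart from anyone else.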

Sandboxes often flag programs that create data backups as malicious and suspect that they are ransomware. This is because backup programs have far-reaching system permissions and therefore access rights to the file system. They contain encryption components and generate a great deal of file system activity, i.e. creating, moving, copying and deleting files. It is very easy to come to the wrong conclusion here if there is no context.
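The overlap between the two kinds of program can be illustrated with a small Python sketch. The behavior labels are simplified placeholders rather than the output of any real sandbox, and the two ransomware-only entries are assumptions added purely for contrast.

```python
# Behaviors named in the text for backup software (simplified labels).
BACKUP_TOOL = {
    "far-reaching permissions",
    "uses encryption",
    "creates files",
    "moves files",
    "copies files",
    "deletes files",
}

# Hypothetical ransomware profile: the same generic behaviors plus
# two assumed examples of behavior a backup tool would not show.
RANSOMWARE = BACKUP_TOOL | {
    "drops ransom note",
    "demands payment",
}

shared = BACKUP_TOOL & RANSOMWARE          # fires for both programs
distinguishing = RANSOMWARE - BACKUP_TOOL  # only here does context help

print(sorted(shared))
print(sorted(distinguishing))
```

Every generic behavior lands in `shared`, so a report built only on those criteria rates both programs the same. What separates them is the context around the behavior, which an analyst has to supply.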

Fixation error

This case has caused quite a stir, not least due to the current geopolitical situation. And as there have already been reports of devices from Asia being manipulated “ex works” in the past, it was reasonable to assume that this could be another such case. In addition, the person who first reported the alleged malware had no experience in the field of malware analysis. They relied on publicly available tools such as an online sandbox without being able to correctly classify their results.

Once again, an automated sandbox is not a malware scanner - nor does it claim to be. As a layperson, however, it is easy to draw the wrong conclusion. And where the results seem so unambiguous, people quickly stop looking for evidence that points to other possible explanations, or even actively ignore it. After all, in their own perception, the result is already abundantly clear. Experts refer to this as a fixation error.

With a grain of salt

The classifications and criteria used by sandboxes can lead even experienced malware analysts up the garden path. “What a sandbox considers ‘malicious’ doesn't necessarily mean it is. For me, these are at best ‘criteria of interest’ that are worth a closer look,” says Karsten Hahn. “But I always have to remind myself not to make the criteria of a sandbox my own criteria, because otherwise I would be biased. Which would be bad.”

Finding these interesting criteria is the real strength of a sandbox. It saves work in the analysis, but the results are neither a substitute for an analysis nor a reliable means of confirming or ruling out that a file is malicious.
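Why a single “malicious” criterion is a weak confirmation can be sketched with Bayes' rule. All three numbers below are invented for illustration and are not measurements of any sandbox; `posterior_malicious` is a hypothetical helper name.

```python
def posterior_malicious(prior: float, hit_rate: float, false_alarm_rate: float) -> float:
    """Probability that a file is malicious, given that a criterion fired.

    prior            -- assumed share of malicious files among all uploads
    hit_rate         -- assumed share of malicious files that trigger the criterion
    false_alarm_rate -- assumed share of clean files that trigger it as well
    """
    flagged_malicious = hit_rate * prior
    flagged_benign = false_alarm_rate * (1.0 - prior)
    return flagged_malicious / (flagged_malicious + flagged_benign)


# Hypothetical scenario: 1 in 100 files is malicious, the criterion fires
# for 90% of malware, but also for 30% of perfectly clean software.
p = posterior_malicious(prior=0.01, hit_rate=0.9, false_alarm_rate=0.3)
print(f"{p:.1%}")
```

Under these assumed numbers, only about 3 percent of the files that trigger the criterion are actually malicious. A criterion like this is worth a closer look, but on its own it settles nothing.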

We can safely assume that the author is pretty embarrassed by this very public faux pas. There was also no shortage of people rubbing salt into the wound and voicing harsh criticism for what, at the end of the day, was just an honest mistake.

However, she has definitely learned one thing from this story:

Never trust the judgment of a sandbox.

And we can leave it at that.